A deep dive into mathematics for more accurate AI

12 December 2024

That computers have become so good at image recognition is largely due to an AI technique called deep learning, which allows a computer to distinguish a cat from a dog pretty well. But as image recognition becomes more widely used, for example in self-driving cars, the demands on accuracy keep rising. Distinguishing a cat from a dog may not matter much to a self-driving car, but distinguishing a fatbike from a regular bike can be crucial, because of the difference in speed. This is where deep learning still falls short, and there is a fundamental mathematical problem underlying it, says Pascal Mettes, associate professor at the Video & Image Sense Lab (VIS Lab) of the Informatics Institute of the UvA.

Errors in object recognition

‘If a person sees a picture of a lynx and says: that's a cat, you understand the error, because those animals look alike. But if they say: that's an elephant, you think: they really don't understand anything, and you never ask that person anything about animals again. You no longer trust their knowledge.’ Deep learning algorithms currently make the same kind of mistake: they can make incomprehensible errors in object recognition, which makes them less reliable. This is due to the way these algorithms classify objects and the hierarchy they apply, which differs from the way humans do it. We classify, for example, as follows: apple tree - fruit tree - tree - flora - living organism. Deep learning networks have taught themselves a very different way of classifying an apple tree, and that classification is opaque to humans.
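One way to make the lynx example concrete is to score how severe a mistake is by its distance in a label hierarchy: the number of steps from the true label up to the lowest category both labels share, and back down to the predicted label. The sketch below assumes a small, hypothetical taxonomy (`PARENT`) built around the apple-tree chain above, and the helper names are illustrative. It shows hierarchy-aware error in general and is not presented as the measure used in Mettes' research.

```python
# Hypothetical toy taxonomy: each label points to its parent category (None marks the root).
PARENT = {
    "living organism": None,
    "animal": "living organism",
    "flora": "living organism",
    "mammal": "animal",
    "carnivore": "mammal",
    "felid": "carnivore",
    "cat": "felid",
    "lynx": "felid",
    "proboscidean": "mammal",
    "elephant": "proboscidean",
    "tree": "flora",
    "fruit tree": "tree",
    "apple tree": "fruit tree",
}


def ancestors(label):
    """Return the path from `label` up to the root, starting with `label` itself."""
    path = []
    while label is not None:
        path.append(label)
        label = PARENT[label]
    return path


def tree_distance(true_label, predicted_label):
    """Count the edges between two labels via their lowest common ancestor.

    A small value means an understandable mistake (lynx -> cat);
    a large value means a mistake across distant branches (lynx -> elephant).
    """
    path_true = ancestors(true_label)
    steps_in_pred = {node: i for i, node in enumerate(ancestors(predicted_label))}
    # The first node on the true label's path that also lies on the predicted
    # label's path is their lowest common ancestor.
    for steps_up, node in enumerate(path_true):
        if node in steps_in_pred:
            return steps_up + steps_in_pred[node]
    raise ValueError("labels do not share a root")


for guess in ["cat", "elephant", "apple tree"]:
    print(f"lynx -> {guess}: {tree_distance('lynx', guess)}")
# lynx -> cat: 2
# lynx -> elephant: 5
# lynx -> apple tree: 9
```

A plain accuracy score counts all three guesses as equally wrong; a hierarchy-aware score distinguishes the forgivable error from the one that makes you stop trusting the system.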

Euclidean space

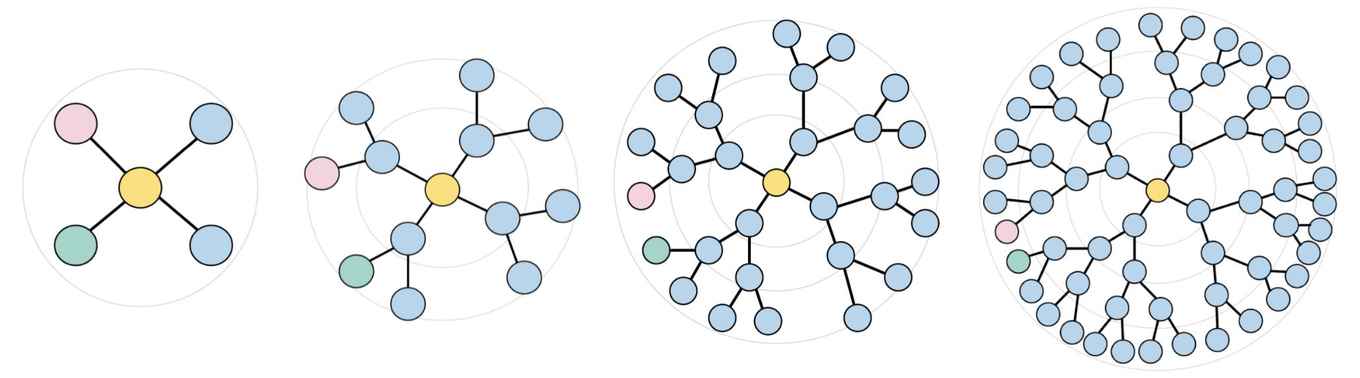

On top of that, the network operates in an abstract space that computer scientists call a ‘latent space’. In this space, objects that fall into the same category naturally end up close together, but objects that actually belong close together, such as a cat and a lynx, are sometimes far apart. This has to do with the fact that these networks represent data in a Euclidean space. ‘You learn the principles of Euclidean space in high school mathematics: for example, that the shortest path between two points is a straight line, and that the three angles of a triangle add up to 180 degrees. But in the real world, these principles don't always apply. If I ask you for the shortest distance from here to New York, it is a curved line, because otherwise you would have to go through the earth.’ In short, the hierarchies that deep learning has to capture grow exponentially (you start with one main category, and with every level down, the number of subcategories multiplies), while the Euclidean space in which the network resides grows only linearly. ‘That is suboptimal. You need a space that grows exponentially along with it.’
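The growth argument can be checked numerically. In the Euclidean plane, a circle of radius r has circumference 2πr, which grows linearly. In the hyperbolic plane (curvature −1), the circumference is 2π·sinh(r), and since sinh(r) ≈ eʳ/2 for large r, it grows exponentially. A hierarchy in which every category splits into b subcategories has bᵈ categories at depth d. The sketch below compares the three; the branching factor of 2 and the function names are illustrative assumptions.

```python
import math


def tree_nodes_at_depth(branching, depth):
    """Categories at a given level of a tree where every category splits into `branching` subcategories."""
    return branching ** depth


def euclidean_circumference(r):
    """Circumference of a circle of radius r in the Euclidean plane: grows linearly in r."""
    return 2 * math.pi * r


def hyperbolic_circumference(r):
    """Circumference of a circle of radius r in the hyperbolic plane (curvature -1): grows like pi * e**r."""
    return 2 * math.pi * math.sinh(r)


# Compare how much "room" each space offers at distance r with how many
# categories a binary hierarchy has at depth r (branching factor 2 is an assumption).
for r in range(0, 11, 2):
    print(
        f"r={r:2d}  tree nodes: {tree_nodes_at_depth(2, r):5d}  "
        f"Euclidean: {euclidean_circumference(r):8.1f}  "
        f"hyperbolic: {hyperbolic_circumference(r):10.1f}"
    )
```

By r = 10 the binary tree has 1,024 categories at that level, while the Euclidean circle offers only about 63 units of room, so siblings must be crowded together; the hyperbolic circle offers far more than the tree needs.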

Hyperbolic geometry performs better

Hyperbolic geometry could be a solution for making more accurate classifications. According to Mettes, this does not mean that the entire deep learning network would have to be rebuilt. ‘Only the last and most fine-grained part, where you name a subspecies, for example, would need to be made hyperbolic.’ In a proof of concept, Mettes and colleagues have already shown that networks based on hyperbolic geometry perform better at hierarchical classification than conventional networks.
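One common way to sketch ‘only the last part is hyperbolic’ is to keep an ordinary (Euclidean) feature extractor, map its output into the Poincaré ball model of hyperbolic space with the exponential map at the origin, and assign the class whose prototype is nearest under hyperbolic distance. The dependency-free code below is a minimal sketch under those assumptions: the 2-D features, the prototype positions and the function names are hypothetical, and it is not presented as the specific architecture of Mettes' proof of concept.

```python
import math

# Keep embedded points strictly inside the ball (floating-point safety margin).
MAX_NORM = 1.0 - 1e-5


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def expmap0(v, c=1.0):
    """Map a Euclidean feature vector into the Poincare ball of curvature -c
    using the exponential map at the origin: tanh(sqrt(c)|v|) * v / (sqrt(c)|v|)."""
    n = norm(v)
    if n == 0.0:
        return [0.0] * len(v)
    sc = math.sqrt(c)
    target_norm = min(math.tanh(sc * n), MAX_NORM) / sc
    return [x * target_norm / n for x in v]


def poincare_distance(x, y, c=1.0):
    """Geodesic distance between two points inside the Poincare ball of curvature -c."""
    diff2 = sum((a - b) ** 2 for a, b in zip(x, y))
    denom = (1 - c * norm(x) ** 2) * (1 - c * norm(y) ** 2)
    return math.acosh(1 + 2 * c * diff2 / denom) / math.sqrt(c)


def classify(feature, prototypes, c=1.0):
    """Embed a Euclidean feature and return the label of the nearest hyperbolic prototype."""
    z = expmap0(feature, c)
    return min(prototypes, key=lambda label: poincare_distance(z, prototypes[label], c))


# Hypothetical 2-D prototypes; fine-grained classes sit near the boundary of the ball.
PROTOTYPES = {
    "cat": [0.90, 0.10],
    "lynx": [0.88, 0.20],
    "elephant": [-0.90, 0.10],
}

print(classify([2.0, 0.4], PROTOTYPES))  # lynx
```

Near the boundary of the ball, distances grow rapidly, which leaves room to keep many fine-grained classes apart. In a trained model, the prototypes and the feature extractor would be learned rather than fixed by hand.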

Mettes initially wants to apply these modified networks in the biological domain. ‘Especially in this domain, examples can be scarce. Pictures of sheepdogs are plentiful on the internet, but not of a wild tiger or a rare plant. A traditional deep learning network then has great difficulty classifying such an object properly.’ Mettes also expects hyperbolic geometry to offer a solution in scientific research. ‘Think of drug research or recognising new molecules in chemistry. An algorithm could quickly figure out whether a certain combination of molecules is toxic or not.’ In this respect, not only the semantics (the naming of an object) can be hierarchical, but also the object itself, such as a molecule composed of several atoms. Language models such as ChatGPT could also perform better and make fewer errors using hyperbolic geometry, Mettes thinks.

Broadly applicable

Mettes, not a mathematician himself but trained as a computer scientist, realises that his field is still very small. Only a few teams worldwide are working on applying hyperbolic geometry to deep learning. ‘A few years ago, everyone thought: what are you dabbling in? But in recent years, several scientific papers have been published, so the field is now taken a bit more seriously. It is also becoming increasingly clear that it is broadly applicable, not just in image recognition. But I do notice that I need to keep advocating for it. So I give tutorials at conferences and invite foreign researchers to do joint research.’

Mettes is mainly interested in applying the underlying mathematics to deep learning, not in the finer details worked out by mathematical geniuses such as Euclid and Poincaré. In that respect he resembles the Dutch artist MC Escher, who visualised hyperbolic geometry in works such as Circle Limit IV but did not understand the mathematician Coxeter's explanation of it. According to Mettes, people who later apply his adapted networks to, say, a biological or chemical database would not need such in-depth knowledge either. ‘It would make me very happy if this technique is integrated into deep learning in five years' time, so that all a software developer has to do is tick the hyperbolic geometry box in the code, and then it will be taken care of.’

Video & Image Sense Lab (VIS Lab) of the UvA