Discovering a host of data sources

28 November 2024

How can we make specific expert knowledge, which exists only in researchers' heads, suitable for analysis by a computer? ‘In our heads, we have a certain way of describing or categorising things, but a computer does not understand that. For example, a researcher knows how new animal species are identified, and a sociologist knows how new research questions about our society are created, but a computer doesn’t know this.’ To bridge that gap, a computer must learn to make semantic connections, i.e. it must not only know which main categories and subcategories people use, but also be able to make all kinds of other classifications. If computers gain access to that knowledge, research will become more reproducible and many scientific discoveries will come within reach, Stork believes.

Social history and biodiversity

In a nutshell, this is what Lise Stork has been working on for some time: making (implicit) scientific knowledge available to computers so that it yields new insights and gives scientists new starting points for research. Stork recently started a new position as assistant professor of data science at the INDElab of UvA's Informatics Institute, where she is continuing this research. In doing so, she plans to focus on different scientific topics, such as social history and biodiversity, subjects that also interest her personally. ‘I have always found the natural world very fascinating. It sometimes feels a bit like a hobby to be involved in geology and animals, for example. And it's great fun to see how natural historians work. They are already working a lot on creating advanced data infrastructures so that data can be integrated and better used.’ Making biodiversity knowledge machine-readable allows conclusions to be drawn that are also of interest to other fields, such as climate studies. ‘Social history is also very interesting, because in that field, study variables are often social constructs. How do you define what an occupation is, and how is that related to social status? Researchers at the International Institute of Social History, which I work with, are already working to define that in computer-readable language.’

Field books

History in particular is a field in which contextual knowledge is very important. Stork discovered this when she conducted doctoral research on the subject in collaboration with the Naturalis museum in Leiden. Her goal then was to make computers understand the relationships between various multimodal sources. Think of historical field books, in which descriptions of animals were recorded during expeditions. ‘A researcher who went to Indonesia in the nineteenth century, for example, would note in his field book: I found this bat today. By making meta-information about those field books machine-readable, links between multimodal sources can be made and such a field book regains context. Who wrote it? In which period? Where was the person? Were local people involved in the search?’
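To make the idea of machine-readable meta-information concrete, here is a minimal sketch in Python. It assumes the metadata is stored as simple subject, predicate, object statements; all identifiers, predicate names and values below are hypothetical and chosen only for illustration, and this is not the data model used in Stork's research.

```python
from collections import defaultdict

# Hypothetical statements about a field book and related sources.
# Every identifier and value here is an illustrative assumption.
triples = [
    ("fieldbook:1", "type", "FieldBook"),
    ("fieldbook:1", "writtenBy", "person:collector-a"),
    ("fieldbook:1", "period", "19th century"),
    ("fieldbook:1", "location", "Indonesia"),
    ("observation:7", "recordedIn", "fieldbook:1"),
    ("observation:7", "describes", "bat"),
    ("specimen:42", "collectedBy", "person:collector-a"),
    ("specimen:42", "collectedIn", "Indonesia"),
]


def describe(subject, triples):
    """Collect every (predicate, object) pair stated about a subject."""
    properties = defaultdict(list)
    for s, p, o in triples:
        if s == subject:
            properties[p].append(o)
    return dict(properties)


def linked_to(value, triples):
    """Find all subjects that mention a given value as an object."""
    return sorted({s for s, _, o in triples if o == value})


# Context of the field book: who wrote it, when and where.
context = describe("fieldbook:1", triples)

# Other sources that share the same collector.
related = linked_to("person:collector-a", triples)

print(context)
print(related)
```

Because the field book and the specimen record both point to the same collector, a simple lookup is enough to connect the two sources, which is the kind of link the quote describes.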

‘When we make scientific knowledge more machine actionable, we enable researchers to build on existing knowledge.’ Lise Stork

Stork's work goes beyond digitising information. ‘A lot of time and effort is often put into digitising the content of such a field book, for example. But the first step is actually to put it online with the meta-information attached. People can then work with the content themselves and make connections.’

Knowledge engineering

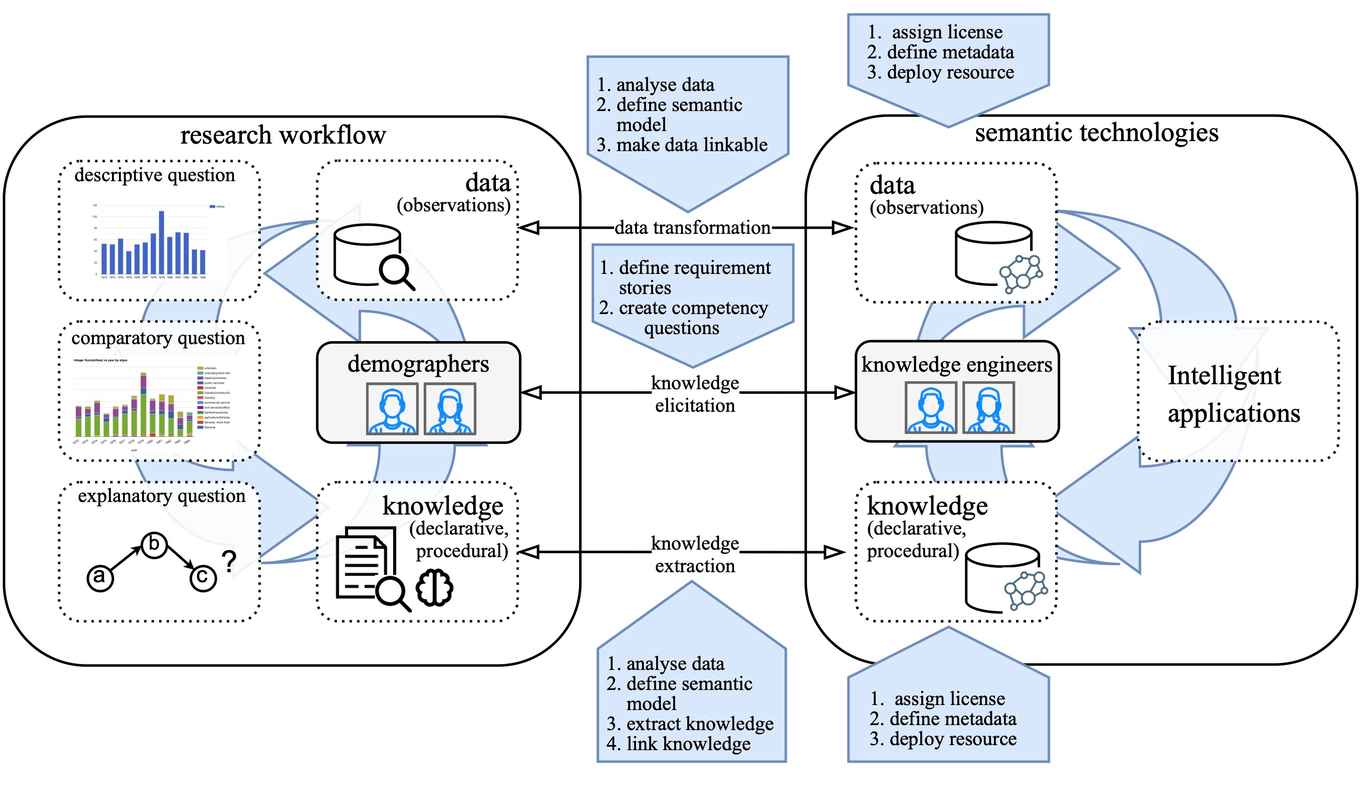

In her research at the UvA, Stork aims to investigate the best ways to acquire (tacit) knowledge of subject areas (domains), for example from domain experts or from knowledge written down in texts. This field is known as knowledge engineering. The knowledge is converted into a language that a computer understands and can then be used for scientific tasks.
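As a rough illustration of what knowledge in "a language that a computer understands" can look like, the following Python sketch encodes a small category hierarchy alongside one other kind of relation. The categories, the relation name `activeDuring` and the helper functions are hypothetical, chosen only for this example; real knowledge engineering typically relies on richer, standardised vocabularies.

```python
# Hypothetical mini knowledge base; the categories and relations are
# illustrative assumptions, not taken from any real vocabulary.
subclass_of = {
    "bat": "mammal",
    "mammal": "animal",
}
other_relations = [
    ("bat", "activeDuring", "night"),
]


def ancestors(category, subclass_of):
    """Follow subclass links upward to list every broader category."""
    result = []
    while category in subclass_of:
        category = subclass_of[category]
        result.append(category)
    return result


def facts_about(category, subclass_of, relations):
    """Combine inherited categories with the other stated relations."""
    facts = [(category, "isA", parent)
             for parent in ancestors(category, subclass_of)]
    facts += [r for r in relations if r[0] == category]
    return facts


for fact in facts_about("bat", subclass_of, other_relations):
    print(fact)
```

The point of the sketch is that once the hierarchy is written down explicitly, the program can derive that a bat is also an animal without anyone stating it directly, and can combine that with relations that are not about categories at all.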

She wants to set up this research together with a number of PhD students, but also with experts from different scientific fields. The research starts with literature analysis: how do knowledge engineers already work together with domain experts, and what are the bottlenecks? Stork also wants to look specifically at community platforms on which scientists collaborate, such as GitHub, blogs and wikis. There, she wants to find out how the data pipeline works, i.e. the whole process from scanning sources, through annotating and building a data model, to analysis. ‘In addition, I would also like to investigate whether parts of this process can be automated, for example using AI-based language models.’

An important guide for her research is the FAIR framework. FAIR is an initiative to make data more findable, accessible, interoperable and reusable. ‘When we make scientific knowledge more machine actionable, we enable researchers to build on existing knowledge.’
