When will robust AI-supported medical imaging finally become a reality?

1 June 2023

Using AI to screen patients for certain diseases can be highly accurate in the lab, yet fail in the real world of healthcare. UvA PhD student Coen de Vente is looking for solutions to this problem. Within the Informatics Institute, De Vente is researching machine learning techniques for screening eye diseases. He works in the Quantitative Healthcare Analysis (qurAI) group of Professor Clarisa Sánchez Gutierrez.

How is it that AI techniques for medical imaging are accurate in the lab, yet fail in practice?

‘Essentially, it comes down to a lack of robustness. AI models are trained on a dataset. When that dataset is not sufficiently representative of the patients being screened in practice, a patient who does have a condition may be told that nothing is wrong, or, conversely, a patient may be diagnosed with a disease they do not have.’

So what are the problems with datasets?

‘Sometimes certain ethnic groups are underrepresented in the data: for example, there is plenty of data from white people but not enough from Black people. But problems also arise because different types of scanners produce different artifacts in their images, or because the conditions under which a scan is made differ slightly from one hospital to another. With eye scans, that can be caused by slightly different lighting conditions.’

How are you trying to solve these kinds of problems?

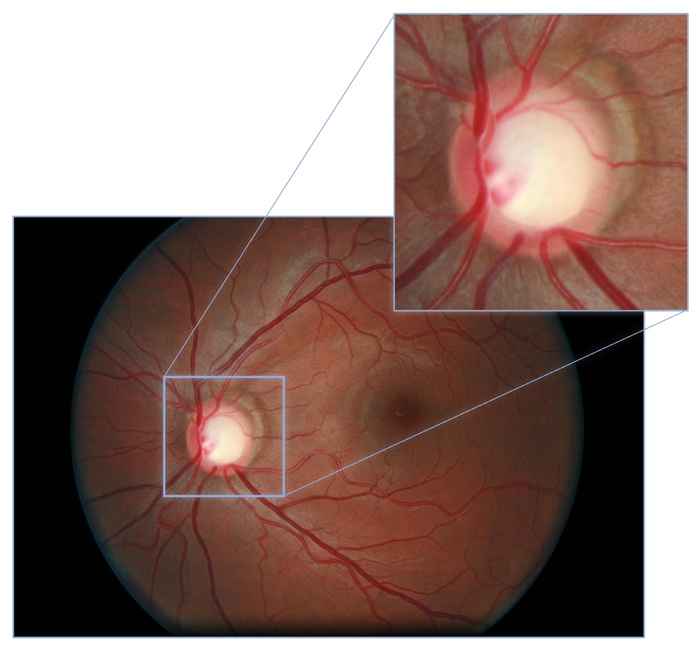

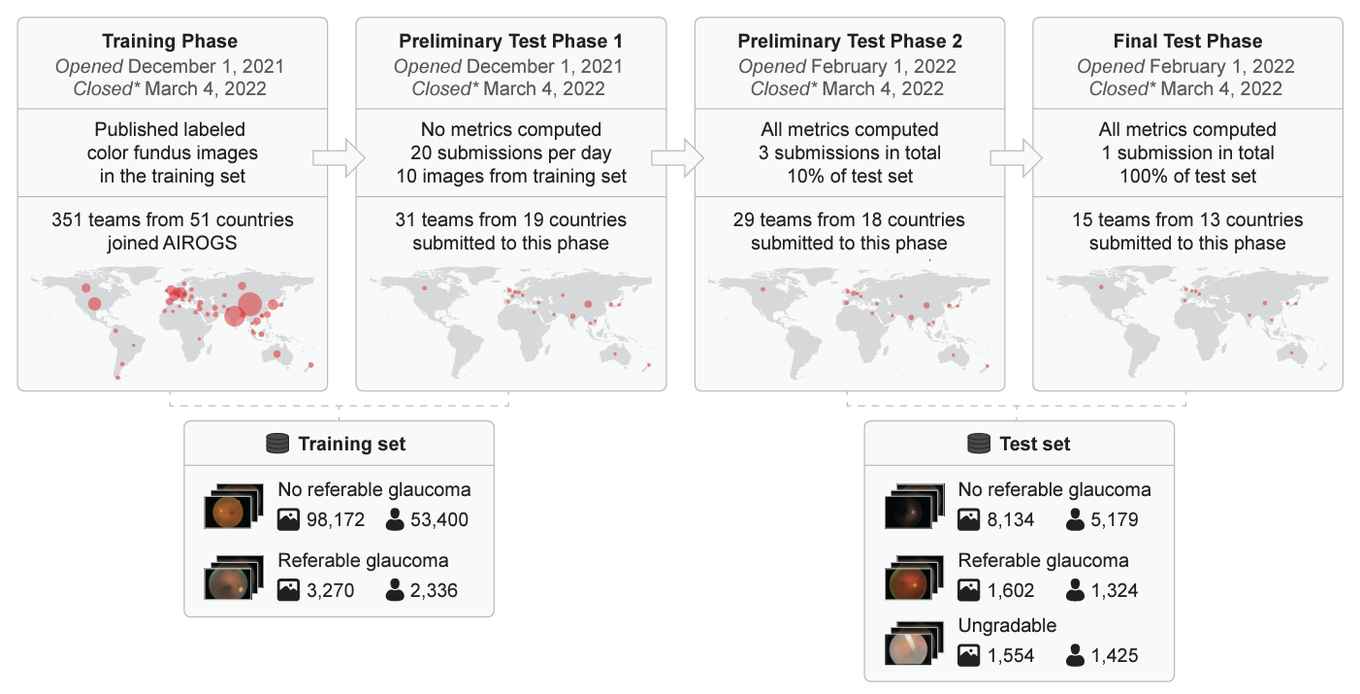

‘Between December 2021 and March 2022, we organized a competition together with Het Oogziekenhuis Rotterdam: the Artificial Intelligence for RObust Glaucoma Screening challenge, AIROGS for short. Glaucoma is an eye disease in which part of the visual field is lost, often due to increased pressure inside the eye. Early detection can prevent a lot of misery, and automated screening with AI can help. The teams that participated in our competition had just over three months to build AI software that could, on the one hand, detect whether or not someone has glaucoma and, on the other hand, indicate whether a particular scan is readable. Our dataset consisted of 113,000 images of about sixty thousand patients from five hundred different screening centers in the US.’

And what was the result?

‘We evaluated the solutions of fourteen teams. The best teams performed similarly to a team of ophthalmologists and optometrists. Many of the algorithms also performed robustly when tested on three other publicly available datasets. These results demonstrate the feasibility of robust AI-supported glaucoma screening.’

Your dataset comes from the US. Can we then use the best AI screening model in the Netherlands without any problems?

‘I would still be cautious about that. If we were to apply this algorithm in Dutch hospitals, we wouldn’t know for sure whether our American training data are sufficiently representative of the Dutch context. You don’t want the algorithm to unexpectedly do something completely wrong. That’s why there is strict regulation before AI software can be used in medical practice. No AI software has yet been approved for glaucoma screening in the EU or the US. Other AI software for ophthalmology has received approval, however, such as software that autonomously detects diabetic retinopathy. It has been approved both in the US by the FDA and in the EU with a CE mark.’

What remains to be done to bring these types of AI screening techniques closer to patient care?

‘As researchers, we still need to do a lot of work setting up large studies. Only then can we develop robust AI screening techniques. Interpretability of the results also remains a major challenge. Ideally, we would like the AI system to be able to explain its results and also to tell us when it doesn’t know. And what is the best way for the system to communicate its results to a physician? That’s another challenge. And finally, of course, we have to look at cost-effectiveness.’