PhD student improves image classification with just one extra line of code

24 November 2022

Imagine a large collection of medical scans in which doctors want to know which ones show a tumor and which ones don’t. Quite probably the training data contain many more scans without a tumor than with one. When an automatic image classification system is trained on such a biased dataset and no extra measures are taken, chances are high that it underdiagnoses tumors, which of course is undesirable.

Generally speaking, the less often a particular kind of example appears in a dataset, the more it is ignored by current-day machine learning systems. And because many datasets in practice are out of balance, many applications unfairly ignore classes that contain only a few examples.

Paper selected

In her first year, PhD student Tejaswi Kasarla from the Video & Image Sense Lab (VIS) of the UvA Informatics Institute developed an elegant and unconventional solution to this problem. Together with five colleagues she described the solution in a paper that was accepted and selected for an oral presentation at the prestigious Conference on Neural Information Processing Systems (NeurIPS), which is being held from 28 November to 4 December 2022 in New Orleans (USA).


'Our solution gives equal importance to all classes in the dataset', says Kasarla, 'not depending on whether a class contains many or only a few examples. The solution adds prior knowledge to the data. If you know the number of classes, then our algorithm tells you exactly how to equally divide the classes.'
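To make the idea of dividing the classes equally more concrete, here is a minimal Python sketch. It rests on an assumption that the article does not spell out: that "equally" means every class gets a direction of length one and every pair of directions is separated by the same angle. The function name `separation_matrix` and the recursive recipe are illustrative, not necessarily the authors' own code.

```python
import numpy as np


def separation_matrix(num_classes: int) -> np.ndarray:
    """Return a (num_classes - 1, num_classes) matrix.

    Each column is a unit vector for one class, and every pair of
    columns has the same cosine similarity, -1 / (num_classes - 1).
    This construction is an assumption made for illustration.
    """
    if num_classes < 2:
        raise ValueError("need at least two classes")

    # Base case: two classes sit at opposite ends of a line.
    matrix = np.array([[1.0, -1.0]])

    # Each step adds one dimension and one class.
    for k in range(2, num_classes):
        # New first row: the new class points along the new axis,
        # all existing classes lean slightly away from it.
        top = np.concatenate(([1.0], -np.ones(k) / k))
        # Existing vectors are shrunk so that they keep length one.
        scale = np.sqrt(1.0 - 1.0 / k**2)
        bottom = np.hstack((np.zeros((k - 1, 1)), scale * matrix))
        matrix = np.vstack((top[None, :], bottom))

    return matrix


if __name__ == "__main__":
    P = separation_matrix(4)
    # Cosine similarities between all class directions.
    print(np.round(P.T @ P, 3))
```

With only the number of classes as input, the sketch produces directions that are all equally far apart, no matter how many training examples each class has.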

Kasarla and her co-authors tested the solution in three cases, including imbalanced settings and settings containing images from other distributions. Kasarla: 'For all three test cases our solution performed significantly better than the best available alternative method. For example, when we tested our method on the widely used CIFAR dataset, we had a gain of twelve percentage points in the case where the largest class contained a hundred times more examples than the smallest class. The more imbalanced a dataset is, the more benefit our solution gives.'

Extra line of code

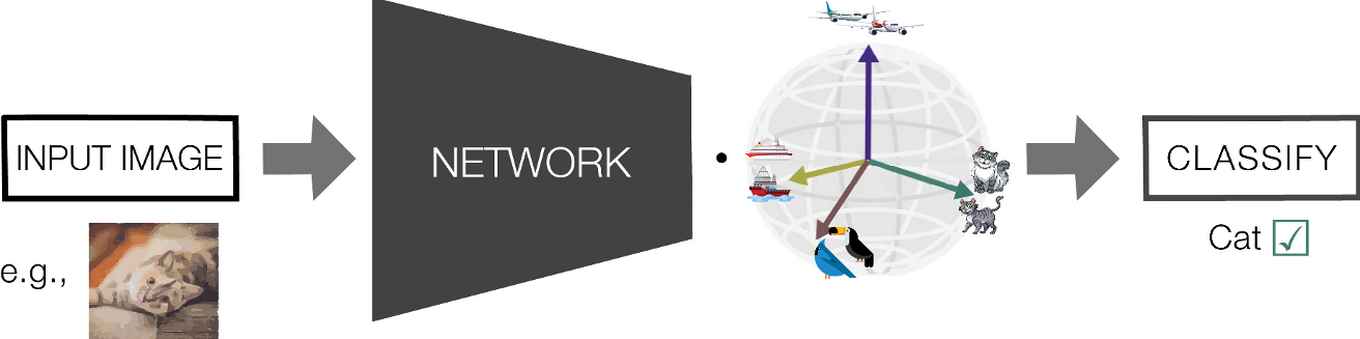

Surprisingly enough, the solution adds only one extra matrix multiplication to the final layer of the deep neural network, says Kasarla’s PhD advisor and UvA assistant professor Pascal Mettes: 'The solution is general purpose and can be implemented in only one extra line of code. That extra line doesn’t even lead to a measurable increase in runtime of the computer program.' Kasarla has already received many positive reactions from international colleagues who want to use her simple solution.
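What such an extra line might look like can be sketched in a few lines of Python. The sketch assumes that the extra matrix is fixed rather than trained and that the network's final layer produces one output fewer than there are classes; the names `class_logits` and `TRIANGLE` are hypothetical, and the three-class matrix is hard-coded for illustration only.

```python
import numpy as np

# Fixed, untrained matrix for three classes (an illustrative assumption):
# each column is a unit vector, and the three columns are 120 degrees apart.
TRIANGLE = np.array([
    [1.0, -0.5, -0.5],
    [0.0, np.sqrt(3) / 2, -np.sqrt(3) / 2],
])


def class_logits(features: np.ndarray,
                 weights: np.ndarray,
                 separation: np.ndarray) -> np.ndarray:
    """Compute class scores with one extra matrix multiplication.

    features:   (batch, d) inputs to the final layer
    weights:    (d, C - 1) trainable weights of the final layer
    separation: (C - 1, C) fixed matrix that is never updated
    """
    hidden = features @ weights   # the usual final layer
    return hidden @ separation    # the one extra line


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    features = rng.normal(size=(5, 8))   # 5 made-up examples
    weights = rng.normal(size=(8, 2))    # untrained, random weights
    scores = class_logits(features, weights, TRIANGLE)
    print(scores.shape)                  # one score per class per example
```

Because the extra matrix is small and fixed, the added cost is a single small multiplication per batch, which fits the remark that the runtime does not measurably increase.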


The real, everyday world not only contains a huge variety of image classes of very different sizes; new image classes also appear constantly, for example because new products enter the market. 'Our method easily allows for adding new classes', says Kasarla, 'so we think that it could be very useful for machine learning systems that continuously have to learn about new classes, so-called continual learning.' It will be one of the directions for her future research during the rest of her PhD.

'The norm for PhD students in our group is to have four papers published after four years', says Mettes, 'but publishing a paper that is accepted at a top conference during the first year of your PhD is quite special.'

Details of the publication

Tejaswi Kasarla, Gertjan J. Burghouts, Max van Spengler, Elise van der Pol, Rita Cucchiara, Pascal Mettes

Title: 'Maximum Class Separation as Inductive Bias in One Matrix'